And got this interesting image. You can make out the murky outline of a teapot if you squint hard. What's going wrong? With new teapot code, a new foreign function interface for the OpenGL bindings, and all of it running in Lisp, rather a lot could be going wrong. I spent an embarrassingly long time chasing down various blind alleys until I did what I should have done first: read the man page for glutSolidTeapot (in my defense, I was on vacation without a broadband connection, so googling wasn't my first instinct). Hey look, there's a big "Bugs" section!

It turns out that we're looking at the inside surfaces of the teapot. Because their surface normals point away from the light source behind the viewer's head, they are very dark. But those surfaces are supposed to be culled, and furthermore, the front surfaces of the teapot should not be! OpenGL can use the order of a polygon's vertices to decide whether the polygon is facing away from the eye point and can be discarded. By default, front-facing polygons are assumed to have their vertices in counterclockwise order. If we turn off back-face culling we get a normal image, but that's not a happy state of affairs. In order to display the GLUT teapot, according to the man page, you must change the vertex order to clockwise with

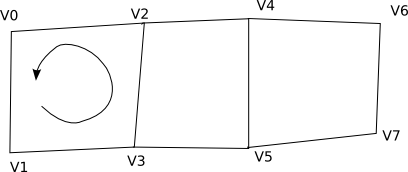

(glFrontFace GL_CW) and then everything's happy. Fair enough, but why?

There's an unfortunate interaction between the OpenGL functions for evaluating Bezier meshes and the "quad strip" primitives that they use to display the approximation of the mesh on the screen. The quad strip looks like this:

That is, the vertices v0, v1, v2, v3, v4, ... are fed to OpenGL using glVertex* calls and the quads are drawn using the vertex order given by the arrow, which in this image is counterclockwise, making these quads front-facing. Now, the mesh produced by OpenGL for a Bezier surface might look like this (sorry for the lame diagram!):

u and v are the parameters of the mesh and increase in the directions of the arrows. The glEvalMesh2 function proceeds in the u direction of the mesh, creating quad strips. That is, if we start at u = 0, v = 0 (do I need MathML here?), then the first few coordinates of the first quad strip will be (0,0), (0,dv), (du,0), (du,dv), (2du,0), (2du,dv), ... and then another strip will be started at (0,dv). But look again at the diagram of quad strips above; this vertex order produces quads that are facing away from the viewer! We can't fix this by reordering the points and putting the origin of the mesh parameters at another corner of the mesh, because the same thing can happen. We could try to swap u and v, but that causes another, worse problem: we're relying on the evaluator to generate surface normals for us, and the normal direction is defined by the cross product of the surface's tangents in the u and v directions. The right-hand rule rules here; if we swap u and v, the normals will point away from us, which is also not good. Are we doomed to live with this cheesy clockwise order?
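The orientation argument above can be checked with a few lines of arithmetic. This is only a sketch, written in Python so that it runs without any OpenGL bindings. It assumes a flat patch lying in the screen plane with u increasing to the right and v increasing upward, and the helper names (`signed_area`, `strip_quads`, `cross`) are made up for illustration; none of them are OpenGL calls. A quad strip builds quad n from vertices 2n, 2n+1, 2n+3, 2n+2, and a positive shoelace area means counterclockwise.

```python
def signed_area(polygon):
    """Shoelace formula: positive for counterclockwise, negative for clockwise."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(polygon, polygon[1:] + polygon[:1]):
        total += x0 * y1 - x1 * y0
    return total / 2.0


def strip_quads(vertices):
    """Split a quad-strip vertex list into quads (vertices 2n, 2n+1, 2n+3, 2n+2)."""
    return [
        [vertices[i], vertices[i + 1], vertices[i + 3], vertices[i + 2]]
        for i in range(0, len(vertices) - 3, 2)
    ]


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


du = dv = 0.25  # arbitrary step sizes, chosen only for the example

# Order described in the text: (0,0), (0,dv), (du,0), (du,dv), (2du,0), (2du,dv)
evaluator_order = [
    (0.0, 0.0), (0.0, dv), (du, 0.0), (du, dv), (2 * du, 0.0), (2 * du, dv),
]
# The same strip with the roles of u and v exchanged.
swapped_order = [(v, u) for (u, v) in evaluator_order]

evaluator_areas = [signed_area(q) for q in strip_quads(evaluator_order)]
swapped_areas = [signed_area(q) for q in strip_quads(swapped_order)]

# Tangents of the flat patch along u and v; +z points toward the viewer.
tangent_u, tangent_v = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
normal = cross(tangent_u, tangent_v)
swapped_normal = cross(tangent_v, tangent_u)

print(evaluator_areas, swapped_areas, normal, swapped_normal)
```

Under these assumptions both quads of the evaluator's strip have negative area, that is, clockwise winding. The swapped strip comes out counterclockwise, but its normal now has a negative z component and points away from the viewer.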

Well, no. Actually we don't have to evaluate the mesh from 0 to 1 in u and v: the glMap2f function takes arguments for the start and end values of u and v. If we evaluate v from 1 to 0:

    ;; The v range is given as 1.0 to 0.0, which reverses the quad winding.
    (loop
      with u-order = (u-order patch)
      and v-order = (v-order patch)
      for patch-list in (patches patch)
      do (loop
           for patch in patch-list
           do (glMap2f GL_MAP2_VERTEX_3 0.0 1.0 3 u-order 1.0 0.0 12 v-order
                       patch)
              (glEvalMesh2 type 0 grid 0 grid)))

then the vertices in the quad strips define quads with the proper orientation:

Yay! We have our teapot.
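For the curious, here is a rough sketch of why reversing the v range does the trick, again in Python so it runs without OpenGL. It models only the bookkeeping for a flat patch: a grid coordinate t is rescaled to (t - t1) / (t2 - t1) using the start and end values given to glMap2f, and the first strip is walked in the order described earlier. I'm assuming the evaluation grid itself runs from 0 to 1 in both directions (the grid setup isn't shown in this post), and the function names are hypothetical.

```python
def signed_area(polygon):
    """Shoelace formula: positive for counterclockwise, negative for clockwise."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(polygon, polygon[1:] + polygon[:1]):
        total += x0 * y1 - x1 * y0
    return total / 2.0


def normalize(t, t1, t2):
    """Rescale a grid coordinate using the map's start (t1) and end (t2) values."""
    return (t - t1) / (t2 - t1)


def first_strip_areas(grid, v_domain):
    """Signed areas of the quads in the first strip of a flat patch.

    Assumes grid coordinates run from 0 to 1 in u and v, and that the
    patch is drawn with its own u to the right and its own v upward.
    """
    v1, v2 = v_domain
    step = 1.0 / grid
    points = []
    for i in range(grid + 1):
        for j in (0, 1):  # strip order: (u, 0), (u, dv), then the next u
            points.append((normalize(i * step, 0.0, 1.0),
                           normalize(j * step, v1, v2)))
    # Quad n of a strip uses vertices 2n, 2n+1, 2n+3, 2n+2.
    return [
        signed_area([points[k], points[k + 1], points[k + 3], points[k + 2]])
        for k in range(0, len(points) - 3, 2)
    ]


default_areas = first_strip_areas(4, (0.0, 1.0))  # v evaluated from 0 to 1
flipped_areas = first_strip_areas(4, (1.0, 0.0))  # v evaluated from 1 to 0

print(default_areas)
print(flipped_areas)
```

With the default range every quad in the strip has negative (clockwise) area; with the range reversed the same walk starts at the top edge of the patch and steps downward, so every quad comes out counterclockwise.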

1 comment:

Hey, cool!

I'll have to bookmark glouton in my «Must Try»-list. :)
